Hi everyone! Welcome to my blog where I share my passion for coding and learning new things. Today I want to talk about a very useful Python library called BeautifulSoup. If you are interested in web scraping, you should definitely check it out!

What is web scraping?

- Web scraping is a technique to extract data from websites. For example, you can use web scraping to get the latest news headlines, product prices, stock quotes, weather forecasts, etc. Web scraping can be very helpful for data analysis, research, or just for fun.

But how do you web scrape?

- Well, you need two things: a way to request web pages and a way to parse the HTML content of those pages. That’s where BeautifulSoup comes in. BeautifulSoup is a Python library that makes it easy to parse HTML and extract the information you want.

Steps involved in web scraping:

- Find the URL of the webpage that you want to scrape

- Select the particular elements by inspecting

- Write the code to get the content of the selected elements

- Store the data in the required format

The popular libraries/tools used for web scraping are:

- Selenium — a framework for testing web applications

- BeautifulSoup — Python library for getting data out of HTML, XML, and other markup languages

- Pandas — Python library for data manipulation and analysis

Installation part

Install Beautiful Soup in Windows and MacOS.

pip install BeautifulSoup4If you are using Linux (Debian) you can install it with following command

# (for Python 2)

`$ apt-get install python-bs4`# (for Python 3)

`$ apt-get install python3-bs4`When you install it, be it in Windows, MacOS or Linux, it gets stored as BS4.

You can import BeautifulSoup from BS4 library using following command

from bs4 import BeautifulSoupScrape a Website With This Beautiful Soup

Make use of your Jupyter Notebook.

Next, import the necessary libraries:

from bs4 import BeautifulSoup

import requestsFirst off, let’s see how the requests library works:

from bs4 import BeautifulSoup

import requestswebsite = requests.get('http://somewebpages.com')

print(website)When you run the code above, it returns a 200 status, indicating that your request is successful. Otherwise, you get a 400 status or some other error statuses that indicate a failed GET request.

Remember to always replace the website’s URL in the parenthesis with your target URL.

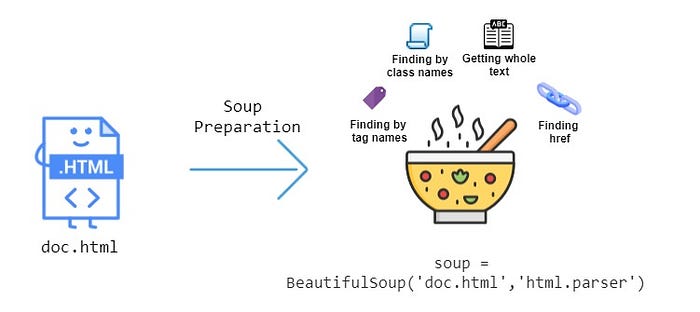

Once you get the website with the get request, you then pass it across to Beautiful Soup, which can now read the content as HTML or XML files using its built-in XML or HTML parser, depending on your chosen format.

Take a look at this next code snippet to see how to do this with the HTML parser:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com')

soup = BeautifulSoup(website.content, 'html.parser')

print(soup)The code above returns the entire DOM of a webpage with its content.

You can also get a more aligned version of the DOM by using the prettify method. You can try this out to see its output:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

print(soup.prettify())You can also get the pure content of a webpage without loading its element with the .text or .string method:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

print(soup.text)

print(soup.string)How to Scrape the Content of a Webpage by the Tag Name

You can also scrape the content in a particular tag with Beautiful Soup. To do this, you need to include the name of the target tag in your Beautiful Soup scraper request.

For example, let’s see how you can get the content in the h2 tags of a webpage.

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

print(soup.h2)In the code snippet above, soup.h2 returns the first h2 element of the webpage and ignores the rest. To load all the h2 elements, you can use the find_all built-in function and the for loop of Python:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

h2tags = soup.find_all('h2')

for soups in h2tags:

print(soups)That block of code returns all h2 elements and their content. However, you can get the content without loading the tag by using the .string method:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

h2tags = soup.find_all('h2')

for soups in h2tags:

print(soups.string)You can use this method for any HTML tag. All you need to do is replace the h2 tag with the one you like.

However, you can also scrape more tags by passing a list of tags into the find_all method. For instance, the block of code below scrapes the content of a, h2, and title tags:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

tags = soup.find_all(['a', 'h2', 'title'])

for soups in tags:

print(soups.string)How to Scrape a Webpage Using the ID and Class Name

After inspecting a website with the DevTools, it lets you know more about the id and class attributes holding each element in its DOM. Once you have that piece of information, you can scrape that webpage using this method. It’s useful when the content of a target component is looping out from the database.

You can use the find method for the id and class scrapers. Unlike the find_all method that returns an iterable object, the find method works on a single, non-iterable target, which is the id in this case. So, you don’t need to use the for loop with it.

Let’s look at an example of how you can scrape the content of a page below using the id:

from bs4 import BeautifulSoup

import requests

website = requests.get('http://somewebpages.com/')

soup = BeautifulSoup(website.content, 'html.parser')

id = soup.find(id = 'enter the target id here')

print(id.text)To do this for a class name, replace the id with class. However, writing class directly results in syntax confusion as Python see it as a keyword. To bypass that error, you need to write an underscore in front of class like this: class_.

In essence, the line containing the id becomes:

my_classes = soup.find(class_ = 'enter the target class name here')

print(my_classes.text)However, you can also scrape a webpage by calling a particular tag name with its corresponding id or class:

data = soup.find_all('div', class_ = 'enter the target class name here')

print(data)Step 1: Installation

Beautiful Soup can be installed using the pip command. You can also try pip3 if pip is not working.

pip install requests

pip install beautifulsoup4

The requests module is used for getting HTML content.

Step 2: Inspect the Source

The next step is to inspect the website that you want to scrape. Start by opening your site in a browser. Go through the structure of the site and find the part of the webpage you want to scrape. Next, inspect the site using the developer tools by going to More tools>Developer tools. Finally, open the Elements tab in your developer tools.

Step 3: Get the HTML Content

Next, get the HTML content from a web page. We use the requests module for doing this task. We call the “get” function by passing the URL of the webpage as an argument to this function as shown below:

#import requests library

import requests

#the website URL

url_link = "https://en.wikipedia.org/wiki/List_of_states_and_territories_of_the_United_States"

result = requests.get(url_link).text

print(result)In the above code, we are issuing an HTTP GET request to the specified URL. We are then storing the HTML data that is received by the server in a Python object. The .text attribute will print the HTML data.

Step 4: Parsing an HTML Page with Beautiful Soup

Now that we have the HTML content in a document, the next step is to parse and process the data. For doing so, we import this library, create an instance of BeautifulSoup class and process the data.

from bs4 import BeautifulSoup

#import requests library

import requests

#the website URL

url_link = "https://en.wikipedia.org/wiki/List_of_states_and_territories_of_the_United_States"

result = requests.get(url_link).text

doc = BeautifulSoup(result, "html.parser")print(doc.prettify())The prettify() function will allow us to print the HTML content in a nested form that is easy to read and will help extract the available tags that are needed.

There are two methods to find the tags: find and find_all().

Find(): This method finds the first matched element.

Find_all(): This method finds all the matched elements.

Find Elements by ID:

We all know that every element of the HTML page is assigned a unique ID attribute. Let us now try to find an element by using the value of the ID attribute. For example, I am looking to find an ID attribute that has the value “content” as shown below:

res = doc.find(id = "content")

print(res)There are many more methods and attributes that you can use with BeautifulSoup to scrape data from web pages.

You can refer to the documentation for more details: https://www.crummy.com/software/BeautifulSoup/bs4/doc/

Beautiful Soup: Build a Web Scraper With Python — Real Python

Beautiful Soup Documentation — Beautiful Soup 4.4.0 documentation (beautiful-soup-4.readthedocs.io)

I hope this blog post was helpful and informative for you. If you have any questions or feedback, please leave a comment below. Happy scraping!